The Rise of Digital Empathy: Can AI Understand Human Emotions?

Introduction

In the age of artificial intelligence (AI), the idea of machines understanding human emotions might seem like something out of a science fiction novel. Yet, as AI technology advances, the concept of digital empathy, in which AI recognizes, interprets, and responds to human emotions, is becoming a reality. This post explores whether AI can truly grasp human emotions or is only simulating empathy. We will examine the technologies driving digital empathy, its applications, and the challenges and ethical considerations associated with this emerging field.

The Evolution of AI: From Basic Functions to Emotional Intelligence

From Early AI to Machine Learning

Initially, AI systems were designed to handle specific, straightforward tasks like calculations and data processing. These early systems operated based on rigid rules and could not adapt beyond their programmed functions. As technology progressed, AI evolved to include machine learning, allowing systems to learn from data and improve their performance over time. This leap enabled AI to handle more complex tasks, such as pattern recognition and predictive analytics.

Advancements in Emotion Recognition

Emotion recognition, a core part of the field known as affective computing, extends AI into a far less structured domain. This field focuses on developing systems that infer human emotions by analyzing signals such as facial expressions, vocal tone, and physiological responses. Where rule-based systems operate on explicit logic, emotion recognition has to interpret subtle and often ambiguous cues about a person’s emotional state.

Key Technologies in Digital Empathy

Facial Recognition Technology

Facial expression analysis, often grouped under facial recognition, is a fundamental technology for digital empathy. Algorithms analyze facial expressions to estimate emotions. For example, a smiling face generally indicates happiness, while a furrowed brow might suggest confusion or frustration. Affectiva and Microsoft (through its Azure Face API) are among the companies that have offered emotion detection from facial images, with systems that classify expressions into a set of emotion categories.

Facial recognition systems work by mapping facial features and comparing them to a database of known expressions. These systems use deep learning to enhance their accuracy over time. This technology has applications in various fields, including marketing, where it helps gauge customer reactions to advertisements, and healthcare, where it monitors patients’ emotional states.
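The map-and-compare step described above can be sketched as a nearest-prototype lookup. This is a toy illustration, not how any named product works: the three features (`mouth_curve`, `brow_lower`, `eye_open`), the prototype values, and the function name are all assumptions invented for the example, and real systems learn these representations from labeled images with deep networks.

```python
import math

# Hypothetical prototypes: (mouth_curve, brow_lower, eye_open), each in [0, 1].
# The numbers are invented for illustration, not measured data.
EXPRESSION_PROTOTYPES = {
    "happy": (0.9, 0.1, 0.6),
    "confused": (0.4, 0.8, 0.5),
    "surprised": (0.5, 0.0, 1.0),
}


def closest_expression(features):
    """Return the prototype label nearest to the feature vector (Euclidean distance)."""
    return min(
        EXPRESSION_PROTOTYPES,
        key=lambda label: math.dist(features, EXPRESSION_PROTOTYPES[label]),
    )


if __name__ == "__main__":
    print(closest_expression((0.85, 0.15, 0.6)))
```

A lookup like this always returns some label, even for a face that matches none of the prototypes well, which is one reason such outputs are better treated as estimates than as facts about how a person feels.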

Voice Analysis

Voice analysis examines vocal characteristics such as pitch, tone, and speed to determine emotional states. Changes in voice patterns can reveal a lot about how someone feels. For instance, a trembling voice might indicate anxiety, while a calm, steady voice suggests contentment.
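To make "vocal characteristics" concrete, here is a minimal sketch that extracts two simple features, a rough pitch estimate and signal energy, from raw audio samples. It assumes a clean, single-tone signal and uses zero-crossing counting, which is far cruder than the pitch trackers used in practice; the function names and the synthetic test tone are assumptions made for the example.

```python
import math


def estimate_pitch_hz(samples, sample_rate):
    """Rough pitch estimate from zero crossings (only sensible for clean, single-tone audio)."""
    crossings = sum(
        1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0)
    )
    duration_s = len(samples) / sample_rate
    # A periodic tone crosses zero twice per cycle.
    return crossings / (2 * duration_s)


def rms_energy(samples):
    """Root-mean-square amplitude, a simple proxy for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def sine_wave(freq_hz, sample_rate=8000, seconds=1.0):
    """Synthetic stand-in for a recorded voice frame."""
    n = int(sample_rate * seconds)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


if __name__ == "__main__":
    tone = sine_wave(220)
    print(round(estimate_pitch_hz(tone, 8000)), round(rms_energy(tone), 2))
```

Features like these would normally be computed over short frames and tracked over time; how they map to an emotion such as anxiety is a separate modeling step that this sketch does not attempt.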

Companies such as Beyond Verbal, and voice assistants such as Amazon’s Alexa, have used voice analysis to improve user interactions. Integrating this technology is intended to make AI more responsive and empathetic. Voice analysis is particularly useful in customer service, where understanding a customer’s emotional state can lead to better support and faster resolution of issues.

Text Analysis

Natural Language Processing (NLP) enables AI to analyze and understand written or spoken language, detecting sentiment and emotional tone. By examining word choice, sentence structure, and even punctuation, AI can infer the emotional context of communication.
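As a rough illustration of inferring tone from word choice and punctuation, the sketch below scores text against a tiny hand-made lexicon and treats exclamation marks as intensifiers. The lexicon, the weights, and the function name are assumptions invented for this example; production sentiment models are typically trained on large labeled corpora and handle negation, sarcasm, and context that this approach misses.

```python
import re

# Tiny hand-made lexicon, invented for illustration only.
WORD_SCORES = {
    "love": 2, "great": 2, "happy": 1,
    "sad": -1, "hate": -2, "terrible": -2,
}


def sentiment_score(text):
    """Sum word scores, then amplify by up to three exclamation marks."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(WORD_SCORES.get(word, 0) for word in words)
    if score != 0:
        # Punctuation strengthens whatever polarity the words already carry.
        score *= 1 + 0.5 * min(text.count("!"), 3)
    return score


if __name__ == "__main__":
    for line in ["I love this, great job!", "I hate waiting", "The meeting is at noon."]:
        print(line, "->", sentiment_score(line))
```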

Text analysis is commonly used in social media monitoring, where AI tools analyze user posts and comments to gauge public sentiment. This technology also has applications in mental health support, where AI chatbots can detect signs of distress or depression and provide appropriate responses.

Biometric Sensors

Biometric sensors, such as wearables and physiological monitors, provide real-time data on physical responses associated with emotions. Changes in heart rate, skin conductance, and body temperature can indicate emotional states like stress or excitement.
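One simple way to turn such readings into a signal is to compare them with a person’s own resting baseline. The sketch below flags heart-rate readings that sit well above that baseline using a z-score. The threshold of 2.0, the sample values, and the function name are assumptions for illustration; an elevated reading can just as easily come from exercise or caffeine, so a flag here indicates physiological arousal, not a specific emotion.

```python
from statistics import mean, stdev


def arousal_flags(baseline, readings, threshold=2.0):
    """Flag readings more than `threshold` standard deviations above the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has no variation; cannot compute z-scores")
    return [(reading - mu) / sigma > threshold for reading in readings]


if __name__ == "__main__":
    resting_bpm = [62, 64, 63, 65, 61, 63, 64, 62]  # invented resting heart rates
    print(arousal_flags(resting_bpm, [64, 70, 63]))
```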

Wearable devices, such as smartwatches and fitness trackers, collect biometric data that can add physiological context to an AI system’s reading of emotional states. By combining biometric sensors with other signals, developers can build more accurate and responsive emotion recognition technologies.

Applications of Digital Empathy

Mental Health

Digital empathy has significant potential in mental health care. AI-driven tools can offer early detection of emotional distress and provide support for individuals dealing with mental health issues. For instance, AI chatbots can engage users in conversations, detect signs of anxiety or depression, and offer resources or therapeutic interventions.

Research is underway to develop AI systems that provide personalized mental health support based on individual emotional needs. These systems could complement traditional therapy, offering additional layers of support and accessibility.

Customer Service

In customer service, digital empathy can improve interactions by responding to customer emotions. For example, an AI assistant that detects frustration in a customer’s voice or messages might escalate the issue to a human agent or offer a more tailored solution.
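The escalation behavior in this example can be sketched as a small routing rule. It assumes some upstream model already produces a frustration score between 0 and 1 for each customer turn; the thresholds, the three-turn window, and the action names are hypothetical choices made for illustration.

```python
def route_conversation(frustration_scores, escalate_at=0.7, window=3):
    """Pick the next action from the average frustration over the most recent turns."""
    recent = frustration_scores[-window:]
    if not recent:
        return "continue_bot"
    average = sum(recent) / len(recent)
    if average >= escalate_at:
        return "escalate_to_human"
    if average >= escalate_at / 2:
        return "offer_tailored_help"
    return "continue_bot"


if __name__ == "__main__":
    print(route_conversation([0.2, 0.8, 0.9, 0.8]))
```

Averaging over a few turns rather than reacting to a single score is a deliberate choice here: it keeps one misread message from triggering an unnecessary handoff.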

Incorporating digital empathy into customer service can enhance customer satisfaction, reduce churn, and build stronger relationships with clients. By addressing emotional cues, companies can offer more personalized and effective service.

Education

Digital empathy can also enhance educational experiences by providing real-time feedback on students’ emotional engagement. AI systems can monitor students’ reactions to educational content and adjust teaching methods accordingly. For example, if a student appears confused or frustrated, the AI could offer additional resources or modify the presentation to better meet their needs.

This approach can lead to more personalized learning experiences, improving student outcomes and fostering a more supportive educational environment.

Companionship

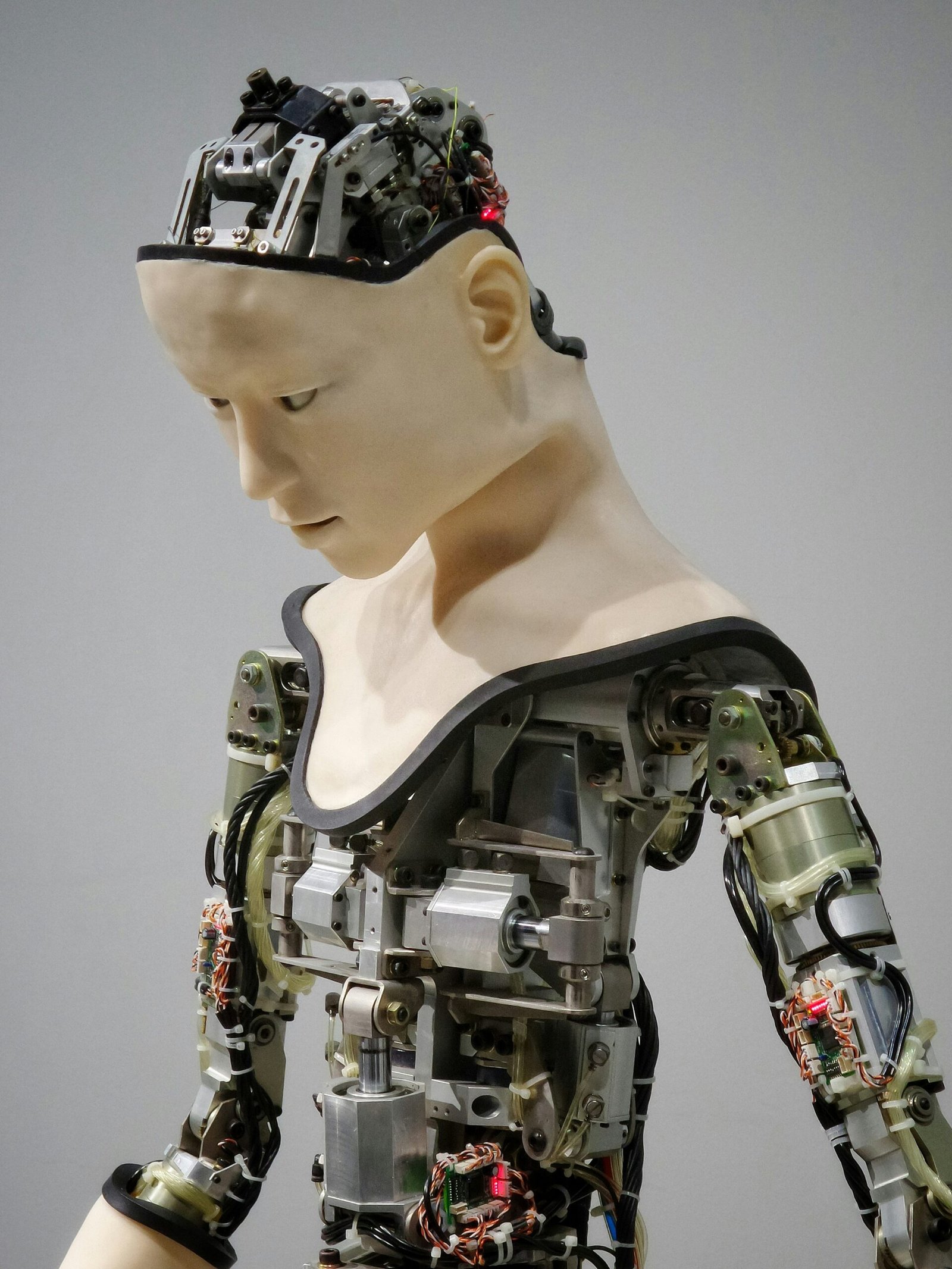

Companion robots for the elderly and individuals with special needs can benefit from digital empathy. These robots can recognize when a person is feeling lonely or distressed and respond with comforting behaviors or initiate social interactions. By providing emotional support and companionship, these robots can enhance quality of life and reduce feelings of isolation.

Human-Robot Interaction

In sectors like healthcare and retail, robots equipped with digital empathy can improve human-robot interactions. For instance, a robot in a hospital setting that understands when a patient is in pain could adjust its behavior to offer comfort or alert medical staff. Similarly, in retail, empathetic robots can create more engaging and personalized shopping experiences for customers.

Challenges and Ethical Considerations

Authenticity of Digital Empathy

A significant challenge is the authenticity of digital empathy. Can AI truly understand emotions, or is it merely simulating empathy? Critics argue that AI lacks the consciousness and subjective experience necessary for genuine emotional comprehension. This raises questions about the depth and authenticity of interactions with empathetic AI.

Privacy Concerns

The collection and analysis of sensitive data, such as facial expressions, voice recordings, and biometric signals, raise privacy concerns. Ensuring that this data is handled securely and ethically is crucial. Users must be informed about how their data is used and have control over their personal information.

Bias in Emotion Recognition

Emotion recognition algorithms can be biased, leading to inaccurate or unfair interpretations of emotions. For example, AI systems trained mostly on data from one demographic group may misread emotional expressions that differ across cultures. This can result in miscommunication and potential discrimination.
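A basic check for this kind of bias is to measure accuracy separately for each group instead of reporting a single overall number. The sketch below does that for a list of labeled predictions; the group names and records are invented for illustration, and a real audit would need far larger samples and more than one metric.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / total[group] for group in total}


if __name__ == "__main__":
    # Invented evaluation records for two hypothetical groups.
    sample = [
        ("group_a", "happy", "happy"), ("group_a", "sad", "sad"),
        ("group_a", "angry", "angry"), ("group_a", "happy", "happy"),
        ("group_b", "happy", "happy"), ("group_b", "sad", "angry"),
        ("group_b", "angry", "angry"), ("group_b", "happy", "sad"),
    ]
    print(accuracy_by_group(sample))
```

A large gap between groups in a report like this is a sign that the training data or the labels do not represent everyone the system will be used on.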

Over-Reliance on AI

There is a risk that people may become overly reliant on AI for emotional support, which could diminish human-to-human interaction. This could lead to a society where individuals prefer interacting with machines over building genuine human connections.

Ethical Implications

As AI systems become more involved in sensitive areas such as mental health care, ethical considerations must be addressed. Questions about accountability, consent, and the potential impact of AI-driven decisions on individuals’ well-being need careful consideration.

The Future of Digital Empathy

The future of digital empathy is promising yet complex. As AI technology continues to evolve, its ability to understand and respond to human emotions will likely improve. This could lead to more natural and effective interactions between humans and machines, enhancing various aspects of life, from customer service to mental health support.

However, addressing the challenges and ethical concerns associated with digital empathy will be essential. Developers and policymakers must work together to ensure that these technologies are used responsibly and for people’s benefit. This includes implementing robust privacy protections, addressing biases, and considering the broader societal impacts of empathetic AI.

Conclusion

Digital empathy represents a significant milestone in AI development. As machines become more capable of understanding and responding to human emotions, they have the potential to improve our interactions and enhance various aspects of life. However, it is crucial to navigate this technology carefully, balancing its benefits with ethical considerations. The journey toward developing truly empathetic AI is ongoing and complex, but it holds the promise of a future where technology can better understand and support our emotional needs.