Meta’s Strategic Move: Training AI Models on European Data to Address Privacy Concerns

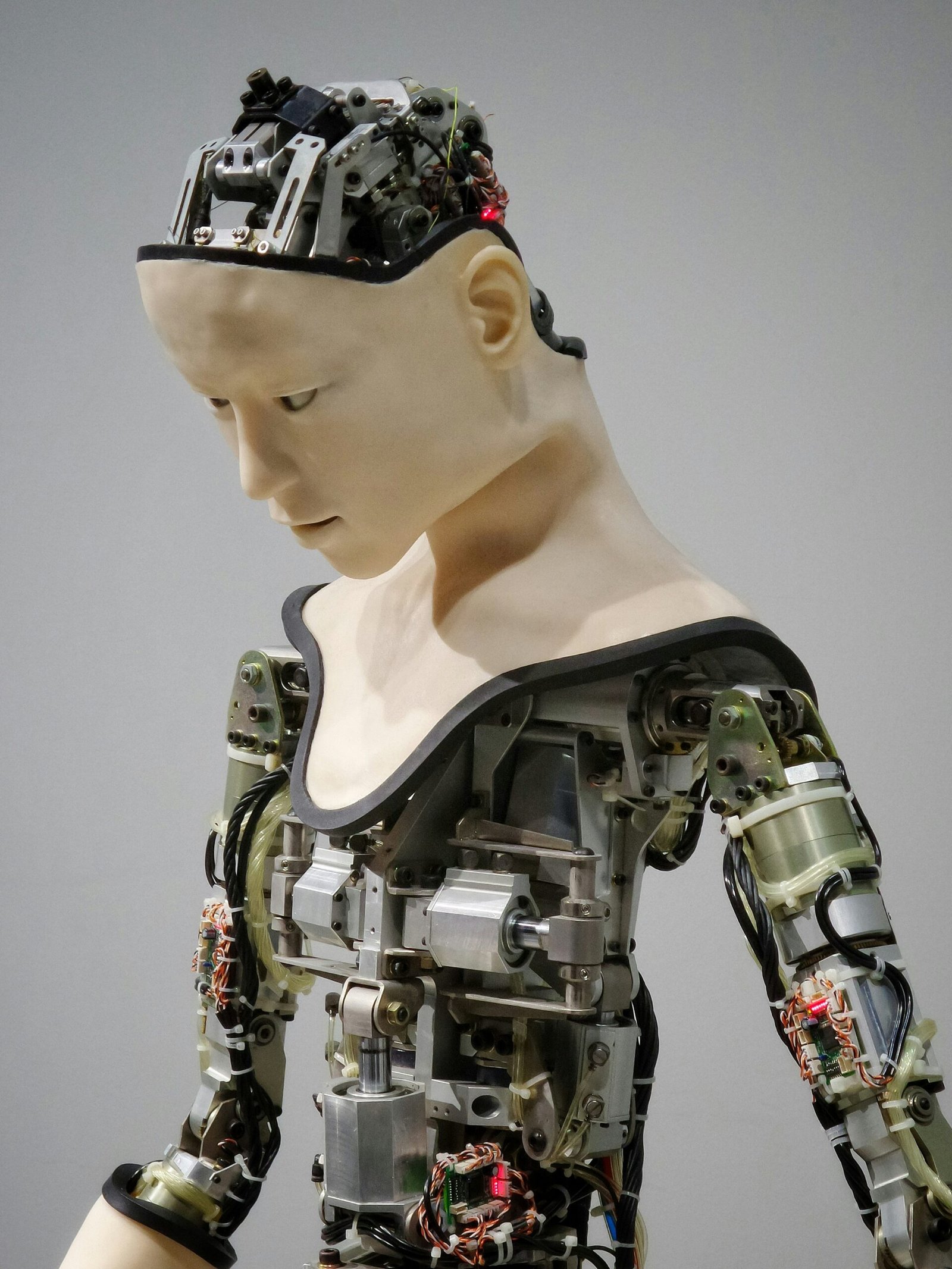

Meta, formerly known as Facebook, has launched an initiative to improve its artificial intelligence (AI) models by training them on data from European users. The move comes against a backdrop of escalating privacy concerns and Europe’s stringent regulatory landscape. Because this data is governed by European privacy law, Meta must align its AI development practices with regional privacy standards, with the aim of fostering trust and demonstrating compliance.

The initiative represents a significant shift in Meta’s approach to AI training. The company has long faced criticism of its data handling practices, especially where user privacy is concerned. The new strategy aims to address these concerns by building privacy safeguards into how its AI models are developed and trained. If it succeeds, it would help Meta meet regulatory requirements and demonstrate a commitment to ethical AI development.

In the broader context of AI development, privacy has become a central issue. As AI technologies become more pervasive, protecting personal data and being transparent about how it is used matter more than ever. European regulations such as the General Data Protection Regulation (GDPR) set a high bar for data protection and have influenced privacy laws well beyond Europe. Because training on European data brings Meta’s work directly under these rules, the initiative has to be designed from the outset to comply with them and to mitigate privacy risks.

This initiative also reflects a broader trend in the tech industry: companies are increasingly treating data privacy as part of their AI strategies. With European data, Meta aims both to improve the performance and reliability of its AI models and to build trust with its users. The approach could set a precedent for other large tech companies, underscoring the importance of integrating privacy considerations into AI development.

In summary, Meta’s new initiative to train AI models on European data marks a pivotal step in addressing privacy concerns and regulatory challenges. This strategic decision reflects the company’s commitment to ethical AI practices and sets the stage for a more privacy-conscious approach to AI development.

The Importance of European Data in AI Development

European data holds particular importance in AI development because of the strict privacy and data protection standards set by the General Data Protection Regulation (GDPR). GDPR is a comprehensive regulation that gives individuals greater control over their personal information, including rights to access, correct, and erase their data and to object to certain kinds of processing. By meeting GDPR’s requirements, companies like Meta can build AI models that prioritize user privacy and, in turn, earn users’ trust.

Using European data can also make AI models more robust and fair. Europe is linguistically and culturally diverse (the EU alone has 24 official languages), and its population varies widely in age, gender, ethnicity, and socioeconomic status. Training on data that reflects this variety can help models perform accurately across different contexts and can reduce some forms of bias, although diverse data alone does not guarantee a fair model.

GDPR also requires organizations to apply “appropriate technical and organisational measures” to secure personal data and to build data protection into their systems by design and by default. Meeting these obligations pushes companies toward more secure AI systems that minimize the risks of data breaches and unauthorized access. Europe’s data protection regime therefore serves as a benchmark for companies aiming for high levels of privacy and security.

Incorporating European data into AI development also helps companies meet the ethical and legal obligations that are becoming a priority for tech firms worldwide. GDPR compliance does not automatically satisfy every other country’s data protection laws, but because many of those laws share GDPR’s core principles, aligning with it puts organizations in a strong position to avoid legal repercussions and gain acceptance across markets.

In summary, European data is valuable for AI development, offering a combination of diversity, robustness, and stringent privacy standards. Used well, it can support AI models that are more reliable, fair, and secure, paving the way for ethical, user-centric technology.

Current Privacy Concerns with AI

The advent of artificial intelligence (AI) has brought substantial advances across many sectors, but it has also introduced significant privacy concerns. One of the most pressing is data breaches, in which unauthorized access to personal data can have severe consequences for both individuals and companies. Meta has faced a series of high-profile data breaches in the past, which exposed vulnerabilities in its systems and raised broader questions about user privacy.

Surveillance is another critical concern. AI’s ability to process vast amounts of data enables extensive monitoring and tracking of individuals’ activities, which privacy advocates warn can erode personal freedoms and invite abuse of power. Meta’s use of AI for targeted advertising has come under particular scrutiny, because it relies on collecting and analyzing large volumes of personal information to predict and influence user behavior.

The misuse of personal information is a further, persistent issue. AI systems often need large datasets to function well, and those datasets can include sensitive personal information. Without strict data governance and ethical safeguards, that information can be exploited, leading to privacy violations. The Cambridge Analytica scandal is a stark example: data from as many as 87 million Facebook users, most of whom had never consented, was collected through a third-party personality-quiz app and used for political profiling and advertising. It showed how personal data held by a large platform can be misused once it leaves the platform’s control.

These privacy concerns underscore the need for robust regulatory frameworks and ethical guidelines to govern the use of AI. As tech companies like Meta continue to develop and deploy AI technologies, addressing these privacy issues becomes paramount. Ensuring that users’ data is protected and that AI systems are transparent and accountable is essential for maintaining public trust and advancing the responsible development of AI.

Meta’s Approach to Data Privacy

In its effort to address privacy concerns, Meta has adopted a multi-faceted approach to data privacy, particularly for training its AI models. A cornerstone of this strategy is data anonymization: modifying personal data so that individuals can no longer be identified. Under GDPR, data that has been truly anonymized falls outside the regulation’s scope, whereas pseudonymized data (for example, with names replaced by tokens that could be linked back using a separate key) still counts as personal data. Anonymization therefore helps protect users while reducing the regulatory burden, but only if it is thorough enough to prevent re-identification.
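The difference between pseudonymization and anonymization is easiest to see in code. Below is a minimal Python sketch of two common building blocks: replacing a direct identifier with a keyed hash (pseudonymization) and coarsening a quasi-identifier such as age into bands. It is not Meta’s pipeline; the record schema, field names, and `SECRET_KEY` are hypothetical. The output is still pseudonymized personal data under GDPR, because anyone holding the key could re-link tokens to users; genuine anonymization would require further steps, such as destroying the key and checking that the remaining fields cannot single anyone out.

```python
import hashlib
import hmac

# Hypothetical key for illustration; a real system would keep it in a
# key-management service, stored separately from the pseudonymized data.
SECRET_KEY = b"replace-with-a-securely-stored-key"


def pseudonymize_id(user_id: str, key: bytes = SECRET_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same ID always yields the same token, so records stay joinable, but
    without the key the token cannot be linked back to the original ID.
    """
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def generalize_age(age: int, band: int = 10) -> str:
    """Coarsen an exact age (a quasi-identifier) into a band such as '30-39'."""
    low = (age // band) * band
    return f"{low}-{low + band - 1}"


def prepare_record(record: dict) -> dict:
    """Keep only the fields needed for training, pseudonymized or generalized.

    The input schema (user_id, name, email, age, country, post_text) is
    hypothetical. Direct identifiers such as name and email are dropped.
    """
    return {
        "user_token": pseudonymize_id(record["user_id"]),
        "age_band": generalize_age(record["age"]),
        "country": record["country"],
        "post_text": record["post_text"],
    }


raw = {
    "user_id": "12345",
    "name": "Alice Example",
    "email": "alice@example.com",
    "age": 34,
    "country": "DE",
    "post_text": "Guten Morgen!",
}
print(prepare_record(raw))
```

Because the token is deterministic for a given key, the same user maps to the same token across records; rotating or destroying the key breaks that link. Free-text fields such as `post_text` can themselves contain identifying details, which this sketch does not address.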

Secure data storage is another critical measure. Meta uses encryption to protect data both at rest (in storage) and in transit (while moving between systems), which guards against unauthorized access and helps preserve the data’s confidentiality and integrity. The company also applies established cybersecurity practices to continuously monitor for and defend against potential threats.

Compliance with GDPR is a key part of Meta’s data privacy strategy. GDPR sets rigorous standards for protecting the personal data of people in the European Union, and Meta has put data governance frameworks in place to meet them. These include regular audits, detailed records of its data processing activities, and transparent communication with stakeholders about how data is processed.

Beyond these foundational measures, Meta is exploring newer techniques to strengthen data privacy and user trust. One is federated learning, in which AI models are trained across decentralized devices that each hold their own local data: devices send back model updates rather than raw data, so the data itself is never exchanged or stored centrally. This limits how much personal data is exposed if a central system is breached. Model updates can still leak some information about the underlying data, however, so federated learning is often combined with other safeguards such as differential privacy.
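The following is a minimal, self-contained sketch of federated averaging (FedAvg), one widely used federated learning algorithm, applied to a toy one-variable linear model. Each simulated client trains locally and returns only its model weights; the server averages them, weighted by how many examples each client holds. The clients, the synthetic data, and the learning-rate and round settings are illustrative assumptions, and this is not a description of Meta’s production system.

```python
import random


def local_train(weights, data, lr=0.05, epochs=20):
    """Run full-batch gradient descent for y = w*x + b on one client's data.

    Only the updated weights are returned; the raw (x, y) pairs never leave here.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Mean-squared-error gradients, computed before either parameter changes.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(client_weights, client_sizes):
    """Server step: average client models, weighted by each client's example count."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)


def run_federated_training(clients, rounds=30):
    """Alternate local training on every client with server-side averaging."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_train(global_weights, data) for data in clients]
        sizes = [len(data) for data in clients]
        global_weights = federated_average(updates, sizes)
    return global_weights


def make_client(n, rng):
    """Synthetic local dataset drawn from y = 3x + 1 plus small Gaussian noise."""
    points = []
    for _ in range(n):
        x = rng.uniform(-1, 1)
        points.append((x, 3 * x + 1 + rng.gauss(0, 0.1)))
    return points


rng = random.Random(0)
clients = [make_client(n, rng) for n in (20, 50, 30)]  # three hypothetical devices
w, b = run_federated_training(clients)
print(f"learned w={w:.2f}, b={b:.2f}")  # should land close to w=3, b=1
```

Real deployments run the same loop over neural-network weights and far more devices, and often protect the updates themselves with secure aggregation or differential privacy, since raw weight updates can still reveal information about local data.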

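Meta is also investing in differential privacy. Differential privacy adds carefully calibrated random noise to query results or to the training process, so that the output reveals almost nothing about whether any single individual’s data was included, while aggregate patterns remain accurate. A privacy parameter, usually written ε (epsilon), sets the trade-off: smaller values mean more noise and stronger privacy, larger values mean less noise and more useful results.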
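A minimal sketch of the classic Laplace mechanism shows how the noise is calibrated. For a counting query, adding or removing one person changes the answer by at most 1 (its sensitivity), so adding Laplace noise with scale 1/ε yields ε-differential privacy. The sketch assumes each person contributes at most one record; the dataset and ε values are hypothetical, and training-time methods such as DP-SGD apply the same idea to gradients rather than to counts.

```python
import random


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponential samples."""
    # expovariate takes a rate, so 1/scale gives exponentials with mean `scale`.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_count(records, predicate, epsilon, rng):
    """Return an epsilon-differentially-private count of records matching predicate.

    Assumes each person contributes at most one record, so the count has
    sensitivity 1 and Laplace noise with scale 1/epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


def is_over_30(age):
    return age > 30


rng = random.Random(42)
ages = [rng.randint(18, 80) for _ in range(1_000)]  # hypothetical one-record-per-person data
true_value = sum(1 for a in ages if is_over_30(a))
for eps in (0.1, 1.0, 10.0):
    noisy = dp_count(ages, is_over_30, eps, rng)
    print(f"epsilon={eps}: true={true_value}, noisy={noisy:.1f}")
```

The expected absolute error equals the noise scale 1/ε, so at ε = 0.1 the released count is off by about ten on average, while at ε = 10 it is off by about 0.1. Each additional query on the same data spends more of the privacy budget.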

Through these comprehensive measures, Meta aims to foster a secure and trustworthy environment for users while advancing its AI capabilities. By prioritizing data privacy, Meta not only complies with regulatory standards but also reinforces its commitment to safeguarding user information in the evolving digital landscape.

Collaboration with European Institutions

Meta’s strategic approach to training AI models on European data necessitates robust collaboration with various European institutions. By partnering with regulatory bodies, Meta aims to ensure compliance with the General Data Protection Regulation (GDPR) and other local privacy laws. These collaborations are vital for aligning AI development with stringent European privacy standards and building trust among users and stakeholders.

One significant aspect of this collaboration involves working closely with academic institutions across Europe. Meta has initiated several research projects with universities and research centers to explore solutions for privacy-preserving AI. These projects focus on techniques such as federated learning and differential privacy, which make it possible to train AI models while limiting the exposure of any individual’s data. By drawing on academic expertise, Meta aims to keep its AI technologies at the forefront of ethical, privacy-focused innovation.

Moreover, Meta is establishing partnerships with industry leaders and tech companies within Europe. These cooperative initiatives aim to foster a shared understanding of best practices in AI development and privacy protection. Through industry consortia and joint ventures, Meta and its partners can collectively address the challenges and opportunities presented by AI, ensuring that the technology evolves in a manner that respects European privacy principles.

Meta’s engagement with European regulators is also a critical component of its collaborative strategy. By maintaining an open dialogue with bodies such as the European Data Protection Board (EDPB) and national data protection authorities, including Ireland’s Data Protection Commission, Meta’s lead supervisory authority in the EU, Meta seeks to anticipate regulatory changes and address compliance issues proactively. This ongoing interaction helps Meta align its AI practices with current regulations and gives it a voice in shaping future policies on AI development and data privacy in Europe.

Through these multifaceted collaborations, Meta demonstrates its commitment to developing AI in a manner that prioritizes user privacy and adheres to European standards. By leveraging the combined expertise of regulatory bodies, academic institutions, and industry partners, Meta aims to create AI technologies that are both innovative and respectful of privacy rights.

Impact on Users and Businesses

Meta’s strategic decision to train AI models on European data while prioritizing privacy is poised to have significant implications for both users and businesses. For users, the enhanced privacy measures are likely to foster increased trust and engagement. As privacy concerns have become a pivotal issue for many individuals, Meta’s commitment to better data protection can reassure users that their personal information is being handled responsibly. This could result in higher user retention and more active participation on Meta’s platforms, as individuals feel safer sharing and interacting online.

For businesses, particularly those that rely heavily on Meta’s platforms for advertising and data analytics, the initiative presents both opportunities and challenges. On one hand, businesses can benefit from a more engaged and trusting user base, which could translate into more effective advertising campaigns and higher conversion rates. Stronger privacy protections may also improve data quality, since users who trust a platform may be more willing to provide accurate information.

On the other hand, stricter privacy measures may limit the amount of data available for analytics, which can pose a challenge for businesses that depend on granular data insights. Companies may need to adapt their strategies to work with aggregated or anonymized data, which could require new tools and methodologies. Additionally, businesses might face increased compliance requirements to align with Meta’s enhanced privacy standards, potentially leading to higher operational costs.
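One common way to report on aggregated data is to compute metrics per audience segment and withhold any segment too small to hide individuals. The sketch below is illustrative only: the event format, segment labels, and 50-user threshold are hypothetical, and it does not represent any Meta reporting API. Threshold suppression on its own is a weak guarantee, so it is often combined with noise of the kind used in differential privacy.

```python
from collections import defaultdict

MIN_GROUP_SIZE = 50  # hypothetical threshold, chosen for illustration


def aggregate_conversions(events, min_group_size=MIN_GROUP_SIZE):
    """Roll per-user events up to per-segment totals, withholding small segments.

    Each event is a (segment, user_id, converted) tuple. A segment is reported
    only if it contains at least `min_group_size` distinct users.
    """
    members = defaultdict(set)
    converters = defaultdict(set)
    for segment, user_id, converted in events:
        members[segment].add(user_id)
        if converted:
            converters[segment].add(user_id)

    report = {}
    for segment, users in members.items():
        if len(users) < min_group_size:
            continue  # too small: omit rather than risk singling someone out
        report[segment] = {
            "users": len(users),
            "conversions": len(converters[segment]),
            "rate": len(converters[segment]) / len(users),
        }
    return report


# Hypothetical events: a 60-user segment and a 5-user segment.
events = [("DE/25-34", f"u{i}", i % 4 == 0) for i in range(60)]
events += [("MT/65+", f"v{i}", True) for i in range(5)]
print(aggregate_conversions(events))
# {'DE/25-34': {'users': 60, 'conversions': 15, 'rate': 0.25}}
```

Counting distinct converting users rather than raw conversion events keeps the rate between 0 and 1 and ensures that no single user can dominate a segment’s total.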

Overall, while Meta’s focus on privacy is a positive step towards addressing user concerns and aligning with European regulations, businesses must navigate the evolving landscape carefully. By embracing these changes and adapting their practices accordingly, companies can continue to thrive while respecting user privacy and maintaining regulatory compliance.

Challenges and Criticisms

Meta’s initiative to train AI models on European data while addressing privacy concerns is an ambitious step, but it is not without challenges and criticisms. One of the foremost technical challenges is implementing privacy measures robust enough to satisfy stringent European regulations such as the General Data Protection Regulation (GDPR). Anonymizing and protecting user data while still extracting useful signal for training is difficult: the more aggressively data is anonymized or noised, the less useful it tends to become, so striking the balance requires advanced techniques and continuous monitoring.

Skepticism from users and regulators also presents a significant hurdle. Users, already wary of data breaches and misuse, may doubt the efficacy of Meta’s privacy promises. Regulators, on the other hand, are likely to scrutinize the methods and technologies employed to ensure compliance with privacy laws. This skepticism could lead to increased regulatory oversight and potential legal challenges, further complicating Meta’s efforts.

Balancing innovation with privacy is another critical aspect of this initiative. While leveraging European data can lead to the development of more sophisticated and accurate AI models, there is an inherent tension between pushing the boundaries of technology and safeguarding user privacy. Striking the right balance is essential to gain user trust and regulatory approval.

On the positive side, this initiative demonstrates Meta’s commitment to addressing privacy concerns, which could enhance its reputation and user trust in the long run. Proactively engaging with regulators and communicating its privacy measures transparently can also foster a more cooperative environment. Furthermore, successfully navigating these challenges could position Meta as a leader in privacy-conscious AI development, setting a benchmark for the industry.

Overall, while Meta’s initiative to train AI models on European data is fraught with challenges and criticisms, it also gives the company an opportunity to innovate responsibly and lead the way in privacy-first AI development. The road ahead is complex, but with careful planning and execution, Meta can turn these challenges into strengths.

Future Outlook and Conclusion

Meta’s initiative to train AI models on European data marks a significant step toward reconciling advanced AI development with stringent privacy standards. Because the data is governed by the General Data Protection Regulation (GDPR), Meta must show that its training practices comply, and in doing so it could set a precedent for how global tech companies balance innovation with regulatory compliance. Success could ease some of the privacy concerns that have long dogged the tech industry and build greater trust among users and regulators alike.

Over the long term, the move could deliver substantial benefits. Stronger privacy measures could produce AI models that respect user data while still performing well. If this balance between data utility and privacy proves workable, it could become a benchmark for future AI development and encourage other tech companies to adopt similar practices, contributing to a broader industry shift toward more responsible data handling.

The ripple effects of Meta’s strategy could extend beyond compliance and trust. By prioritizing European data, Meta may also unlock new avenues for AI innovation. Diverse and region-specific data sets can enrich the training process, potentially leading to AI solutions that are more adaptable and culturally aware. This focus on localized data could enhance the relevance and effectiveness of AI applications, making them more attuned to the needs of varied user demographics.

In conclusion, Meta’s commitment to training AI models on European data represents a forward-thinking approach to navigating the complex landscape of data privacy and technological advancement. The initiative underscores the importance of aligning AI development with robust privacy standards and could set a new bar for the industry. If other tech companies see the benefits of this strategy, it could herald an era in which innovation and privacy are not mutually exclusive but complementary forces driving the future of technology.