OpenAI Forms Safety Committee as It Starts Training Latest Artificial Intelligence Model

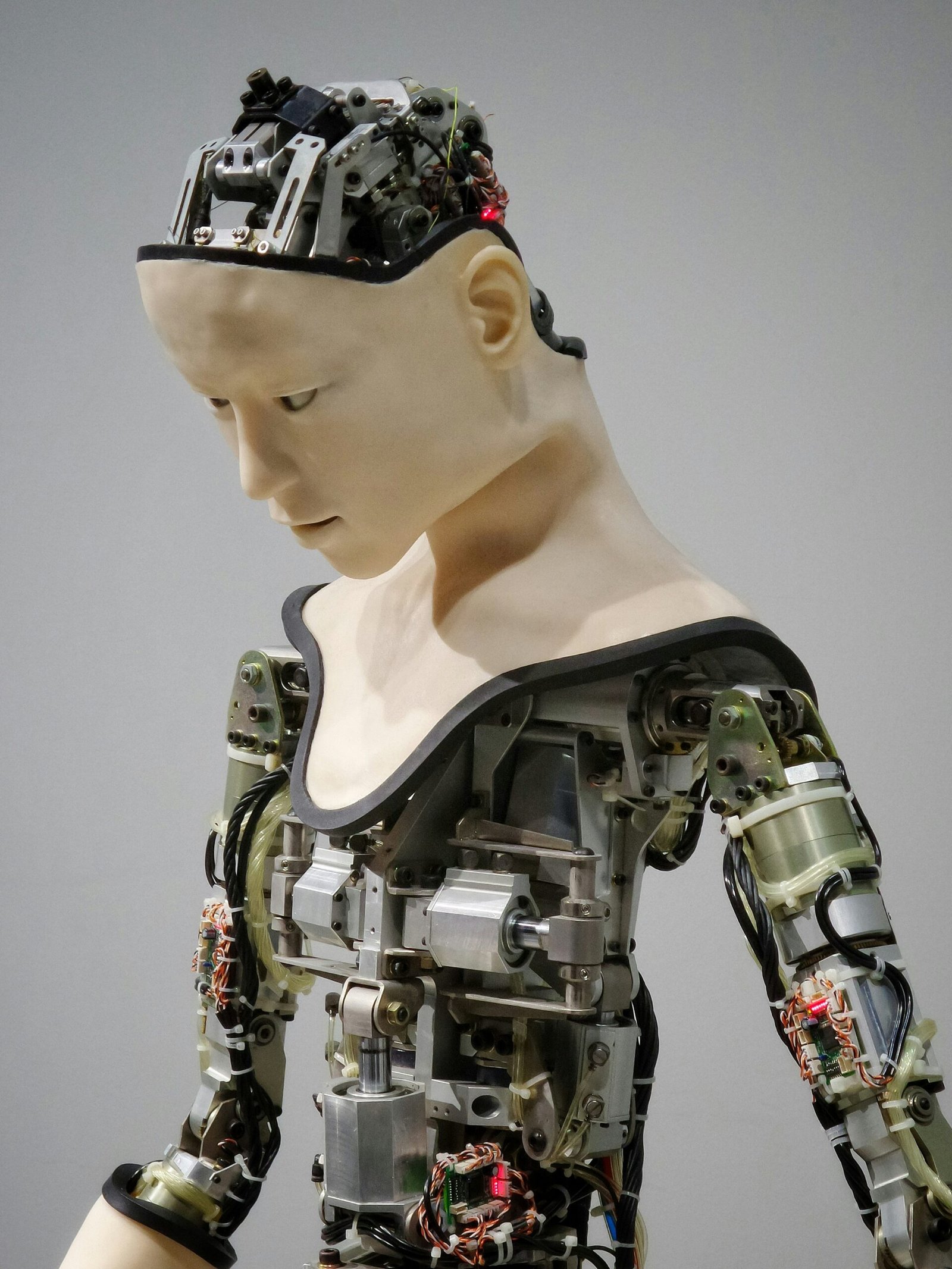

Photo by Google DeepMind on Unsplash

Introduction to OpenAI’s Latest Initiative

OpenAI has announced the formation of a dedicated safety committee as it begins training its latest artificial intelligence (AI) model. The initiative underscores OpenAI’s ongoing commitment to the ethical and safe development of AI technologies. The committee is a proactive step aimed at addressing the complex challenges and potential risks that come with advancing AI capabilities.

In the fast-evolving field of artificial intelligence, the importance of safety and ethical considerations cannot be overstated. OpenAI has consistently emphasized these principles in its mission to create and promote AI technologies that are beneficial to humanity. The formation of the safety committee aligns with OpenAI’s broader efforts to mitigate risks and enhance the reliability of AI systems.

OpenAI’s past endeavors have included a range of measures designed to ensure the responsible development and deployment of AI. These have encompassed research into AI safety, the establishment of partnerships with external organizations, and active engagement with the broader AI research community. The announcement of the safety committee represents a continuation of these efforts, aiming to provide an additional layer of oversight and expertise as OpenAI trains its latest model.

The safety committee will be tasked with identifying and addressing potential safety concerns throughout the AI training process. This includes evaluating the model’s behavior, testing for unintended consequences, and ensuring compliance with established ethical guidelines. By implementing such rigorous oversight, OpenAI aims to build public trust and foster a responsible AI ecosystem.

Overall, the establishment of the safety committee highlights OpenAI’s dedication to advancing artificial intelligence in a manner that prioritizes safety, ethics, and societal benefit. As the organization progresses with the training of its latest AI model, this initiative will play a crucial role in guiding the development of safe and reliable AI technologies.

The Role and Responsibilities of the Safety Committee

As OpenAI embarks on training its latest artificial intelligence model, the formation of the Safety Committee marks a significant step towards ensuring the ethical and responsible development of AI technologies. The Safety Committee is entrusted with a variety of critical roles and responsibilities aimed at safeguarding the interests of all stakeholders involved. This initiative reflects a proactive approach to addressing some of the pressing concerns that accompany the rapid advancement of AI.

One of the primary responsibilities of the Safety Committee is to oversee the adherence to data privacy standards. This entails rigorous scrutiny of data handling practices to ensure that personal information is protected and used ethically. The committee will evaluate the sources of data, the methods of data collection, and the protocols for anonymization and security. In doing so, it aims to uphold the highest standards of data privacy and prevent any breaches that could compromise user trust.

Another critical area of focus for the Safety Committee is mitigating algorithmic bias. AI models, if not properly trained, can inadvertently reinforce existing biases present in the data. The committee will implement comprehensive measures to identify, assess, and rectify any biases within the AI algorithms. This includes conducting regular audits and employing diverse datasets to ensure that the AI systems operate fairly and impartially across different demographic groups.
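To make the idea of a bias audit concrete, the sketch below compares how often a model produces a favorable decision for each demographic group and reports the largest gap between groups (often called the demographic parity gap). It is an illustration only, not OpenAI’s audit procedure: the function names, group labels, and sample data are all hypothetical.

```python
from collections import defaultdict


def positive_rates(records):
    """Return the share of favorable decisions per demographic group.

    `records` is an iterable of (group, decision) pairs, where decision
    is 1 for a favorable outcome and 0 otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Largest difference in favorable-decision rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: (group label, model decision).
sample = [
    ("group_a", 1), ("group_a", 1), ("group_a", 0), ("group_a", 1),
    ("group_b", 1), ("group_b", 0), ("group_b", 0), ("group_b", 0),
]

rates = positive_rates(sample)
gap = parity_gap(rates)
print(rates)
print(f"demographic parity gap: {gap:.2f}")
```

A gap near zero suggests the groups receive favorable decisions at similar rates. A large gap is a signal to investigate further rather than proof of unfairness, since a single metric cannot capture every notion of fairness.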

Additionally, the Safety Committee is tasked with addressing the potential misuse of AI technologies. This involves developing robust frameworks and guidelines to prevent the deployment of AI in harmful or unethical applications. The committee will engage in continuous monitoring of AI applications, both within and outside the organization, to detect and mitigate any misuse promptly. Collaboration with external experts and regulatory bodies will also be a key component of this effort.

The Safety Committee’s decision-making is intended to be structured and transparent. Regular meetings will be held to review progress, assess risks, and make informed decisions. The committee will also keep an open channel of communication with all relevant stakeholders, including researchers, developers, and external advisors, to maintain a comprehensive understanding of the evolving AI landscape.

Through these multifaceted responsibilities, the Safety Committee aims to create a safe and ethical environment for AI development, ultimately contributing to the responsible advancement of artificial intelligence technologies.

Members of the Safety Committee

The newly formed OpenAI Safety Committee comprises a diverse group of experts, each bringing a distinct set of skills and perspectives. This multidisciplinary team has been selected to ensure a holistic approach to the many safety concerns associated with developing and deploying advanced artificial intelligence models. The committee includes professionals from various fields such as computer science, ethics, law, sociology, and psychology, among others.

The committee is led by OpenAI board directors Bret Taylor (chair), Adam D’Angelo, Nicole Seligman, and Sam Altman, OpenAI’s chief executive. It is responsible for making recommendations to the full board on critical safety and security decisions for OpenAI projects and operations.

Alongside the directors, the committee includes OpenAI technical and policy staff: Aleksander Madry (head of preparedness), Lilian Weng (head of safety systems), John Schulman (head of alignment science), Matt Knight (head of security), and Jakub Pachocki (chief scientist). OpenAI has also said it will retain and consult outside safety, security, and technical experts, including former government officials Rob Joyce and John Carlin.

The selection criteria for the committee members were stringent, focusing on their expertise, professional accomplishments, and their commitment to ethical AI practices. The diversity in their backgrounds is not just a reflection of their individual specializations but a strategic decision to address a wide range of safety concerns. This includes technical challenges, ethical dilemmas, regulatory compliance, and societal impacts. By integrating these varied perspectives, the committee is well-equipped to devise comprehensive strategies for the safe and responsible development of OpenAI’s latest artificial intelligence model.

Training the Latest AI Model

OpenAI’s latest artificial intelligence model represents a significant leap forward in the realm of AI technology. This model is designed to improve upon its predecessors by integrating more advanced capabilities and addressing previous limitations. The primary focus of the new AI model is to enhance problem-solving skills, improve natural language understanding, and provide more accurate predictions. These advancements are intended to make the model highly versatile and applicable across a wide range of industries.

To achieve these capabilities, OpenAI employs a diverse array of data sources for training. The model ingests vast quantities of text data from books, websites, and academic journals, among other content-rich sources. This extensive dataset enables the AI to develop a deep understanding of language patterns, contextual nuances, and domain-specific knowledge. Additionally, OpenAI incorporates cutting-edge machine learning techniques, such as reinforcement learning and transformer architectures, to optimize the model’s performance.

Technological advancements underpinning this AI model include improvements in computational power and algorithmic efficiency. OpenAI leverages state-of-the-art hardware and cloud infrastructure to manage the immense computational demands of training such a sophisticated model. Innovations in parallel processing and distributed computing allow for faster training times and the ability to handle more complex data inputs.

The potential applications of this AI model are vast and varied. In healthcare, it can assist in diagnosing diseases, recommending treatments, and managing patient data. In finance, the model can analyze market trends, detect fraudulent activities, and optimize investment strategies. In customer service, it can provide accurate and instant responses to customer inquiries, improving overall satisfaction. Additionally, the model’s language generation capabilities can be utilized in content creation, translation services, and educational tools.

The benefits of OpenAI’s latest AI model extend beyond individual industries. By enhancing efficiency, accuracy, and scalability, the model has the potential to drive innovation and productivity on a global scale. As OpenAI continues to refine and expand its capabilities, the new model stands as a testament to the transformative power of artificial intelligence.

Safety Protocols and Ethical Guidelines

OpenAI has meticulously crafted a set of safety protocols and ethical guidelines to oversee the development and deployment of its latest artificial intelligence model. These measures are designed to ensure that the AI model operates within established ethical standards, promoting transparency, accountability, and user consent throughout its lifecycle.

One cornerstone of OpenAI’s approach is transparency. The organization is committed to openly sharing information about the AI model’s capabilities, limitations, and potential impacts. This includes publishing detailed reports and documentation that provide insights into the model’s functioning, as well as engaging with the broader AI research community to solicit feedback and foster collaborative improvements.

Accountability is another critical element of OpenAI’s ethical framework. To this end, OpenAI has implemented robust mechanisms to track and audit the AI model’s performance and decision-making processes. These mechanisms enable continuous monitoring and evaluation, helping to identify and rectify any unintended consequences or biases that may arise. By holding the AI model to stringent standards of accountability, OpenAI aims to build trust and ensure that the technology is used responsibly.

User consent is also a fundamental aspect of OpenAI’s ethical guidelines. The organization emphasizes the importance of obtaining explicit consent from users before collecting, storing, or utilizing their data. This user-centric approach ensures that individuals are fully informed and have control over how their information is used, thereby safeguarding their privacy and autonomy.

In addition to these core principles, OpenAI has established a comprehensive risk assessment framework. This framework involves rigorous testing and evaluation procedures to identify potential risks and mitigate them proactively. By adhering to this systematic approach, OpenAI aims to minimize the likelihood of harmful outcomes and ensure that the AI model aligns with societal values and ethical norms.

Through these safety protocols and ethical guidelines, OpenAI demonstrates its commitment to developing AI technologies that are not only innovative but also aligned with the broader goals of societal well-being and ethical responsibility. These measures serve as a foundation for the responsible advancement of artificial intelligence, fostering an environment where AI can be harnessed for positive and meaningful impact.

Challenges and Risks in AI Development

AI development carries numerous challenges and risks that must be carefully managed to ensure safe and ethical deployment. One of the most significant is algorithmic bias, which occurs when AI systems inadvertently perpetuate or even amplify existing societal biases. This can lead to unfair outcomes in critical areas such as hiring, lending, and law enforcement. Addressing algorithmic bias requires rigorous testing, diverse training data, and ongoing evaluation to ensure AI systems are equitable and just.

Data security is another critical concern in the realm of AI development. AI systems often rely on vast amounts of data, some of which can be sensitive or personal. Ensuring this data is protected from breaches and unauthorized access is paramount. Robust encryption, anonymization techniques, and stringent access controls are essential measures to safeguard data integrity and privacy. Furthermore, the potential for AI to be exploited for malicious purposes, such as creating deepfakes or automating cyber-attacks, necessitates vigilant monitoring and countermeasures.
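As a small illustration of the data-protection measures mentioned above, the sketch below replaces a sensitive field with a keyed hash before a record is stored or analyzed. Strictly speaking this is pseudonymization rather than full anonymization, because anyone holding the key can recompute the mapping. The field names, the record, and the key are hypothetical, and nothing here describes OpenAI’s actual data pipeline.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (hex string)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, sensitive_fields: set, key: bytes) -> dict:
    """Return a copy of `record` with the sensitive fields pseudonymized."""
    return {
        field: pseudonymize(str(val), key) if field in sensitive_fields else val
        for field, val in record.items()
    }


# Hypothetical key and record; a real key would come from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"
record = {"email": "user@example.com", "country": "NL", "query": "weather"}

clean = scrub_record(record, {"email"}, SECRET_KEY)
print(clean)
```

Because the hash is keyed and deterministic, the same email always maps to the same token, so records can still be joined for analysis without exposing the original address.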

The potential for AI to be used in harmful ways extends beyond data security. Autonomous systems, such as self-driving cars or military drones, pose significant risks if not properly controlled and programmed. Ensuring these systems operate safely and ethically requires comprehensive safety protocols, fail-safes, and clear regulatory frameworks. The establishment of OpenAI’s safety committee represents a proactive step towards addressing these multifaceted risks. The committee’s mandate includes continuous monitoring of AI systems, conducting risk assessments, and implementing safety measures to mitigate potential harms.

Moreover, the safety committee aims to foster collaboration with other stakeholders, including policymakers, industry experts, and the broader public, to develop and enforce ethical guidelines and standards for AI development. By taking these proactive measures, OpenAI seeks to navigate the complexities of AI development responsibly, ensuring that the benefits of AI are realized while minimizing its associated risks.

Collaboration with External Experts and Organizations

OpenAI has consistently demonstrated its commitment to enhancing the safety and ethical standards of its artificial intelligence technologies through strategic collaborations with external experts, academic institutions, and various organizations. These partnerships are pivotal in fostering a broader dialogue and collective action on AI safety, ensuring that the development and deployment of AI systems are conducted responsibly.

One of the primary avenues through which OpenAI collaborates with external entities is through joint research initiatives. By partnering with leading academic institutions, OpenAI can access a wealth of knowledge and diverse perspectives that are crucial for addressing complex ethical and safety challenges. These collaborations often result in co-authored papers, shared datasets, and innovative methodologies that push the boundaries of AI safety research.

In addition to academic partnerships, OpenAI engages with industry experts and other organizations to establish and promote best practices in AI development. These collaborations often involve forming working groups and advisory panels that include a mix of AI researchers, ethicists, policymakers, and industry leaders. Through these forums, OpenAI can gather a wide range of insights and recommendations that inform the design and implementation of its AI models.

One notable example of OpenAI’s collaborative efforts is its participation in global AI safety initiatives. By joining forces with international organizations dedicated to AI ethics and safety, OpenAI contributes to creating standardized guidelines and frameworks that are adopted worldwide. This global perspective is essential as AI technologies continue to impact various sectors and societies.

OpenAI also recognizes the importance of transparency and public engagement in its collaboration efforts. By openly sharing research findings, participating in public consultations, and hosting workshops and conferences, OpenAI ensures that its work on AI safety is not only informed by experts but also accessible to a broader audience. This approach helps build public trust and fosters a more inclusive conversation about the future of AI.

Future Outlook and Commitment to AI Safety

OpenAI’s recent formation of a safety committee underscores its unwavering commitment to the ethical development and deployment of artificial intelligence. As the organization embarks on training its latest AI model, this newly established committee will play a pivotal role in ensuring that safety protocols are not only maintained but continually enhanced. This step reflects OpenAI’s recognition of the dynamic and potentially transformative nature of AI technologies, necessitating a proactive approach to risk management.

Looking ahead, OpenAI is poised to implement a series of forward-thinking initiatives designed to bolster its safety measures. One such initiative is expanding collaborations with external experts in AI ethics, cybersecurity, and public policy. By drawing on the insights and expertise of a diverse array of stakeholders, OpenAI aims to create a more comprehensive and resilient safety framework. Additionally, OpenAI plans to invest in advanced research focused on identifying and mitigating emergent risks associated with increasingly sophisticated AI systems.

Adaptability remains a cornerstone of OpenAI’s strategy. The organization is acutely aware that the landscape of artificial intelligence is rapidly evolving, with new challenges and opportunities emerging at an unprecedented pace. To stay ahead of these developments, OpenAI is committed to continuous learning and iterative improvement. This involves regularly updating its safety protocols, conducting rigorous testing and validation of AI models, and fostering a culture of transparency and accountability.

Moreover, OpenAI recognizes the critical importance of public engagement and education. By demystifying AI technologies and openly communicating the measures taken to ensure their safe use, OpenAI seeks to build public trust and foster a collaborative environment. This approach not only enhances the legitimacy of OpenAI’s efforts but also encourages a broader societal discourse on the ethical implications of AI.

In conclusion, OpenAI’s long-term commitment to AI safety is both a strategic imperative and a moral obligation. By continuously refining its safety protocols and engaging with a wide range of stakeholders, OpenAI is dedicated to navigating the complexities of artificial intelligence responsibly and ethically. As the organization advances in its mission, its steadfast focus on safety will remain central to its endeavors, ensuring that the benefits of AI are realized in a manner that is safe, transparent, and aligned with societal values.