The Ethics of AI: Balancing Innovation and Responsibility

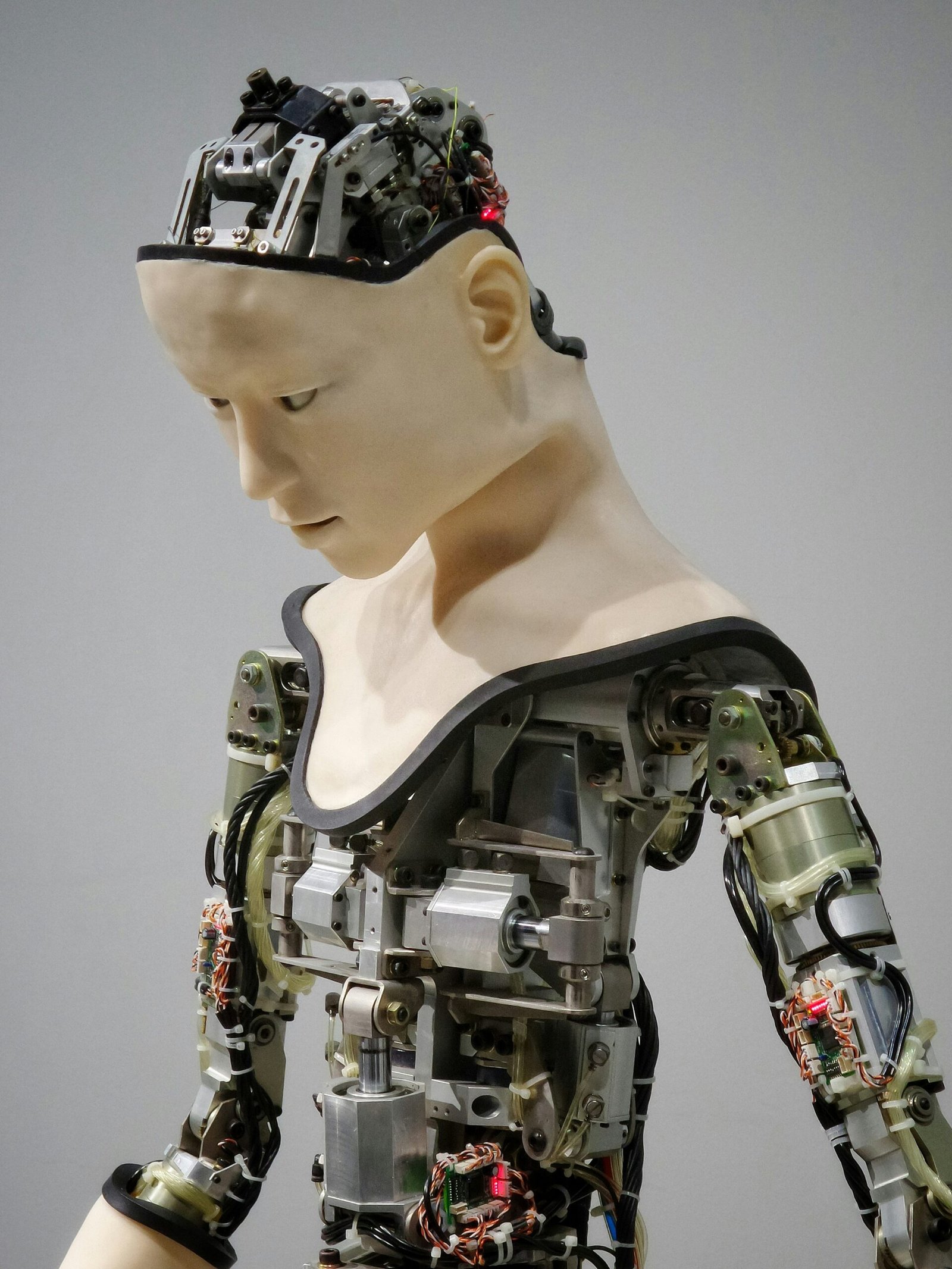

Photo by Google DeepMind on Unsplash

Introduction to AI Ethics

The rapid evolution of artificial intelligence (AI) technologies has transformed various aspects of human life, from healthcare to finance and beyond. As these innovations continue to proliferate, the ethical implications inherent in their application become increasingly significant. AI ethics emerges as a crucial framework for guiding the responsible development and deployment of these technologies, ensuring that advancements do not compromise fundamental values or societal norms.

The concept of AI ethics encompasses a broad range of principles and concerns, including fairness, accountability, transparency, and privacy. With AI systems capable of making decisions that can profoundly impact lives, the need for a robust ethical framework is paramount. For instance, biases in algorithmic decision-making can perpetuate existing societal inequalities, leading to adverse outcomes for marginalized groups. Thus, a proactive approach to addressing these ethical concerns is essential to cultivate public trust in AI systems.

Moreover, the fast pace of innovation raises critical questions surrounding responsibility and oversight. As AI technologies become more autonomous, determining who is accountable for their actions poses a significant challenge. Ethical guidelines must therefore evolve in tandem with technological advancement to provide clarity on issues such as liability, consent, and the moral ramifications of AI decisions. Additionally, the potential for misuse of AI technology further underscores the importance of establishing a solid ethical foundation.

Recognizing the need for a balanced approach, stakeholders—ranging from developers to policymakers and the public—must engage in meaningful dialogue to devise effective strategies that harmonize innovation with ethical responsibility. This section sets the stage for exploring the complex interplay between technological advancement and ethical considerations, facilitating a deeper understanding of how to navigate this critical juncture in AI development.

Historical Context of AI Development

The history of artificial intelligence (AI) spans several decades, beginning in the mid-20th century when researchers began to explore the possibility of creating machines capable of simulating human intelligence. The term “artificial intelligence” was coined by John McCarthy in the 1955 proposal for the Dartmouth workshop, which took place in the summer of 1956 and was organized by McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. This pivotal moment marked the beginning of serious AI research and development, signaling a shift in how computers could be utilized to solve complex problems.

Throughout the 1960s and 1970s, AI research reached significant milestones, including the development of early algorithms and of programs capable of playing games like chess. However, despite early optimism, the field encountered major challenges, leading to the periods known as “AI winters,” first in the mid-1970s and again in the late 1980s, which were characterized by decreased funding and interest due to unmet expectations. These periods highlighted the limitations of early AI systems and contributed to a recalibration of societal expectations surrounding artificial intelligence.

In the 1990s and 2000s, advancements in computing power and progress in machine learning revitalized interest in AI. Techniques such as neural networks and natural language processing, which had been studied for decades, matured and transformed how machines could understand and interpret data. This resurgence paved the way for AI applications to permeate everyday life, from virtual assistants to recommendation systems. However, as AI became more deeply integrated into society, ethical considerations surrounding its use also intensified, influenced by past mistakes and public concern over privacy, bias, and accountability.

Reflecting on the historical context of AI development reveals how far the technology has come while underscoring the necessity of addressing ethical implications. Lessons learned from previous periods of stagnation and misunderstanding continue to inform current discussions about responsible innovation in AI, emphasizing the importance of maintaining a balance between technological advancement and ethical responsibility.

Key Ethical Principles in AI

The rapid advancement of artificial intelligence (AI) technologies necessitates a framework of ethical principles to ensure responsible development and deployment. Among these principles are transparency, accountability, fairness, and privacy, each playing an essential role in guiding AI practices.

Transparency involves clear communication regarding how AI systems function, the data they utilize, and the decision-making processes they engage in. For instance, when an AI model is employed in healthcare, it is crucial for medical professionals and patients alike to understand the basis of the AI’s recommendations. This understanding promotes trust and facilitates informed decisions, helping to mitigate any fears associated with the potential opacity of advanced technologies.

Accountability is another vital ethical principle, denoting the responsibility of developers and organizations to ensure that their AI systems operate safely and do not cause harm. Companies must be prepared to address issues arising from their AI technologies, whether those issues stem from bias in decision-making or from unintended consequences of automated processes. An example of accountability can be found in the automotive industry, where manufacturers are increasingly held responsible for the safe operation of AI-driven vehicles. By establishing clear lines of accountability, stakeholders can foster a culture of safety and vigilance in AI development.

Fairness pertains to the equitable treatment of individuals by AI systems, minimizing bias and discrimination. It is essential to ensure that AI applications in job recruitment or loan approvals do not propagate existing societal inequalities. For instance, implementing diverse training datasets can help create AI models that are more representative, thus enhancing fairness and inclusivity in their outcomes.
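One way to make the fairness principle concrete is to compare how often each group receives a favorable decision. The sketch below is a minimal illustration rather than a complete audit: the group labels, the `decisions` data, and the function names are all hypothetical, and a low ratio is a signal to investigate further, not proof of discrimination.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable decisions for each group.

    `records` is an iterable of (group, approved) pairs. The structure
    and the sample data below are hypothetical.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no one was selected, so no group is favored
    return min(rates.values()) / highest


# Hypothetical loan decisions: (group label, approved?)
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(round(ratio, 2))
```

Selection-rate parity is only one of several fairness measures, and different measures can disagree with each other, so the choice of metric is itself an ethical decision that should be made with the affected stakeholders in mind.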

Finally, privacy is a cornerstone of ethical AI, safeguarding individuals’ personal information. AI systems must adhere to data protection mechanisms that respect user consent and confidentiality. Companies should adopt privacy-focused approaches, such as data anonymization, to prevent unauthorized access to sensitive information. Upholding these ethical principles will ultimately create a responsible AI ecosystem that aligns with societal values.
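As a rough illustration of a privacy-focused approach, the sketch below replaces direct identifiers with keyed hashes before a record is used for analysis. Note that this is pseudonymization, which is a weaker guarantee than full anonymization: anyone holding the key can recompute the tokens, and the remaining fields may still allow re-identification. The field names, the sample record, and the key are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, secret_key: bytes, id_fields=("name", "email")) -> dict:
    """Return a copy of `record` with direct identifiers replaced by tokens."""
    cleaned = dict(record)  # copy so the original record is left untouched
    for field in id_fields:
        if field in cleaned:
            cleaned[field] = pseudonymize(str(cleaned[field]), secret_key)
    return cleaned


# Hypothetical key; in practice it would come from a secret store, not source code.
key = b"example-key-do-not-use-in-production"
patient = {"name": "Jane Doe", "email": "jane@example.com", "age": 54}

safe = scrub_record(patient, key)
print(safe)
```

Because the same input and key always produce the same token, records belonging to one person can still be linked for analysis without exposing the person's name or email address.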

The Role of Stakeholders in AI Ethics

The development and deployment of artificial intelligence (AI) technologies involve a diverse array of stakeholders, each with unique responsibilities in upholding ethical standards. Key participants in this ecosystem include developers, companies, consumers, and regulatory bodies. Each group plays a vital role in ensuring that AI solutions are both innovative and responsible.

Developers, often seen as the architects of AI, are tasked with creating algorithms that not only function effectively but also align with ethical principles. This responsibility extends to incorporating transparency and fairness into their code, potentially involving rigorous testing to prevent biases. The understanding of ethical implications during the development phase is crucial, as overlooking these considerations can result in harmful consequences for society as a whole.

Companies that deploy AI systems also have a significant role in monitoring and enforcing ethical practices. Businesses must prioritize ethics in their corporate culture, establishing guidelines that dictate how AI technologies should be used. This includes implementing protocols for data privacy, accountability, and sustainability. Additionally, companies should actively engage with stakeholders to understand their concerns and expectations, fostering trust and transparency in their AI initiatives.

Consumers are equally important within this framework. As users of AI technologies, they have the right to understand how these systems operate and the intentions behind their implementation. By voicing their opinions and concerns, consumers can influence AI strategies and promote ethical standards that align with societal values.

Finally, regulatory bodies serve a critical function in establishing the legal frameworks that govern AI. They are responsible for crafting policies that address the ethical implications of AI technologies while ensuring accountability and transparency. Collaboration among developers, companies, consumers, and regulatory entities is essential for effective decision-making, ultimately leading to a balanced approach that prioritizes ethical considerations throughout the AI lifecycle.

Case Studies: AI Ethics in Action

Exploring the realm of artificial intelligence (AI) ethics through real-world examples provides critical insights into the challenges and successes associated with AI implementation. One prominent case study is that of Google’s DeepMind and its application to healthcare. By utilizing AI algorithms to diagnose eye diseases, DeepMind demonstrated how technology can significantly improve patient care and outcomes. The ethical considerations in this scenario revolved around data privacy and patients’ consent. DeepMind ensured compliance with ethical guidelines by employing robust data anonymization techniques and obtaining informed consent from participants. This instance not only highlights the potential benefits of AI in healthcare but also emphasizes the importance of maintaining ethical standards in AI deployment.

Conversely, the controversial deployment of facial recognition technology brings to light the problematic aspects of AI ethics. In 2019, San Francisco banned the use of facial recognition technology by city agencies, including the police, amid concerns about its disproportionate impact on marginalized communities. Reports indicated that the technology was less accurate for people of color, raising concerns about bias and discrimination. This case underscores the necessity of scrutinizing AI tools and their societal implications, particularly regarding fairness and accountability. Failing to address these ethical concerns may result in serious ramifications for both individuals and communities.

Another illustrative case is the use of AI-driven algorithms in hiring processes. Companies that have adopted AI for candidate screening have encountered ethical dilemmas regarding bias. If the training data is skewed or non-representative, the AI could inadvertently perpetuate existing biases in hiring. This scenario has prompted businesses to reassess their AI systems and to advocate for transparency and fairness in recruitment practices. Consequently, organizations are increasingly collaborating with experts to ensure their algorithms are designed to promote diversity and equality.

These case studies underscore the complexity of AI ethics in action, revealing both the immense potential of technology and the ethical pitfalls that can arise without careful consideration and implementation. The lessons derived from these examples will guide future AI development, reinforcing the need for a balanced approach that prioritizes innovation while upholding responsibility.

Challenges in Implementing Ethical AI

The implementation of ethical AI practices presents numerous challenges that organizations encounter as they strive to balance innovation with responsibility. One significant challenge is the complexity of establishing universal ethical standards that can be widely accepted across various industries and geographical regions. Different cultures and regulatory environments often lead to divergent views on what constitutes ethical behavior, complicating efforts to develop a cohesive framework that can govern AI technologies on a global scale.

Moreover, organizations often find themselves in a dilemma between pursuing competitive advantages and adhering to ethical considerations. The tech landscape is highly competitive, with firms seeking to leverage AI for better market position. This drive can sometimes lead to ethical compromises, as businesses prioritize speed and performance over responsible practices. The pursuit of efficiency in AI development can unintentionally sideline the critical examination of associated ethical implications, leading to the proliferation of biased, unfair, or harmful algorithms.

Another layer of complexity arises from navigating existing legal frameworks. In many regions, AI regulations are still evolving, and there is often a lack of clarity regarding compliance and best practices. Organizations may struggle to understand their legal obligations surrounding data privacy, algorithm transparency, and accountability. This unclear legal landscape can hinder the adoption of ethical AI practices, as companies may fear legal repercussions or public backlash if they misinterpret compliance requirements.

These challenges persist due to the rapid pace of technological advancements, which often outstrips the ability of ethical frameworks and regulatory solutions to adapt. Strategies for overcoming these challenges include fostering collaboration among stakeholders—such as policymakers, ethicists, and technologists—to co-create ethical guidelines that align with both societal needs and business objectives. In addition, organizations can invest in training programs that emphasize ethical decision-making to foster a culture of responsibility within the AI development process.

Regulatory Frameworks for Ethical AI

The rapid advancement of artificial intelligence (AI) technologies has prompted the need for comprehensive regulatory frameworks that promote ethical AI development. Governments, industry leaders, and international organizations are increasingly recognizing the importance of establishing guidelines and policies that prioritize accountability, transparency, and fairness in AI systems. Several frameworks have been proposed or implemented with the aim of mitigating the potential risks of AI while still fostering innovation.

In the United States, regulatory bodies such as the Federal Trade Commission (FTC) have begun issuing guidelines that emphasize the ethical use of AI, focusing on consumer protection and data privacy. These guidelines aim to ensure that AI applications are designed rigorously to prevent discrimination and bias. Moreover, the U.S. National Institute of Standards and Technology (NIST) has developed the AI Risk Management Framework, which emphasizes the importance of reliability, safety, and user trust in AI systems.

Across the Atlantic, the European Union has taken a more prescriptive approach with the AI Act, which was proposed in 2021 and entered into force in August 2024. This regulation establishes clear standards for AI applications, categorizing them by risk level, from minimal to unacceptable. The AI Act emphasizes the need for compliance with ethical norms, including human oversight, data governance, and transparency. By holding developers and users of AI systems to account, this regulation encourages safe and responsible AI integration into society.

Internationally, organizations like the Organisation for Economic Co-operation and Development (OECD) and the United Nations (UN) have initiated discussions around ethical AI principles to foster global cooperation. These frameworks aim to harmonize efforts across borders while addressing the challenges posed by AI systems that fall short of ethical norms.

While these frameworks are steps in the right direction, there remains a pressing need for continuous evaluation and refinement. Ensuring effective compliance and adaptability to evolving technologies will be crucial to uphold ethical standards. Strengthening collaboration between governments, industries, and civil society can further enhance the integrity of AI development globally.

Future of AI Ethics: Trends and Predictions

The future of artificial intelligence (AI) ethics is poised to evolve significantly as technology advances and societal implications become more pronounced. One emerging trend is the establishment of AI ethics committees within organizations. These committees aim to oversee AI developments, ensuring that ethical considerations are integrated into the design and deployment of AI systems. By fostering interdisciplinary collaboration, these groups can provide a balanced perspective that includes considerations of fairness, accountability, and transparency.

Another notable development on the horizon is the advancement of ethical AI tools. Innovations in this area are focusing on creating algorithms that embody ethical principles and reduce biases in decision-making processes. Solutions such as explainable AI enable users to understand the rationale behind AI-driven decisions, thus fostering trust and accountability. The integration of ethical frameworks into machine learning algorithms will likely become standard practice, allowing organizations to navigate complex ethical dilemmas more effectively.
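To give a sense of what explainability can look like in the simplest case, the sketch below breaks a linear score into per-feature contributions so that a user can see which inputs pushed a decision up or down. It is an illustration only: the weights, the feature names, and the applicant values are hypothetical, and most deployed models are not linear, so they require more involved explanation techniques than this.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return a score and its per-feature contributions, largest effect first.

    Each contribution is weight * feature value, so the contributions
    plus the bias add up to the final score.
    """
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical credit-scoring weights and one applicant's (scaled) features.
weights = {"income": 0.5, "debt": -0.8, "years_employed": 0.2}
applicant = {"income": 4.0, "debt": 2.0, "years_employed": 5.0}

score, ranked = explain_linear_score(weights, applicant)
print(f"score = {score:.2f}")
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

An explanation in this form lets the person affected by a decision see that, in this made-up case, income raised the score the most while debt lowered it, which is the kind of rationale that supports trust and accountability.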

As AI continues to permeate various sectors, from healthcare to finance, its role in society is evolving. Governments and regulatory bodies will need to adapt policies to address the rapid pace of AI development. Ensuring that these policies prioritize ethical considerations will be crucial in mitigating potential societal risks associated with AI deployment. Furthermore, public discourse surrounding AI ethics is expected to intensify, leading to greater awareness and engagement among stakeholders, including consumers, professionals, and policymakers.

In this dynamic landscape, the interplay between innovation and responsibility will shape the future of AI ethics. As organizations increasingly recognize the importance of ethical AI, a collaborative effort involving diverse stakeholders will be essential. Continued dialogue, research, and the development of ethical standards will guide the responsible advancement of AI technologies, promoting a future where innovation aligns with societal values and ethical principles.

Conclusion: Striking the Right Balance

The rapid advancement of artificial intelligence (AI) presents both significant opportunities and pressing challenges. As discussed, it is essential to navigate the complex landscape of innovation while maintaining a strong commitment to ethical practices. The importance of balancing technological progress with responsibility cannot be overstated; each has a vital role in shaping a future that is not only efficient but also equitable.

Throughout this blog post, we have explored the various dimensions of AI ethics, including the importance of transparency, accountability, and fairness in AI systems. Developers, policymakers, and businesses must work collaboratively to establish frameworks that ensure responsible usage of AI. The integration of ethical considerations into AI design is crucial. Equally, cultivating awareness around the societal implications of AI can help mitigate risks and foster a culture of integrity.

Moreover, as individuals, we all have a role in advocating for these responsible practices. Engaging in dialogues about AI ethics within our communities can encourage a collective push toward accountability in AI development. Whether through social platforms, local workshops, or academic discussions, fostering awareness and understanding of the ethical challenges posed by AI is vital. The responsibility lies not only with those directly involved in creating AI but also with those who apply it. It is imperative that discussions around ethics become commonplace in all circles, promoting a future where innovation is harmoniously balanced with responsibility.

In conclusion, the dialogue surrounding AI ethics is far from over. As technology evolves, so too must our understanding of, and commitment to, responsible innovation. We must remain vigilant and proactive in our approach, striving to ensure that the benefits of AI are realized without compromising ethical integrity. Together, we can lay the groundwork for a more responsible and equitable technological landscape.